Stellar inference speed via AutoNAS

- Episode: 149 of 339

- Published: 7 Sep 2021

- Duration: 42 min

- Language: English

- Category: Nonfiction

Yonatan Geifman of Deci makes Daniel and Chris buckle up, and takes them on a tour of the ideas behind his amazing new inference platform. It enables AI developers to build, optimize, and deploy blazing-fast deep learning models on any hardware. Don’t blink or you’ll miss it!


Sponsors:

- RudderStack: Smart customer data pipeline made for developers. RudderStack is the smart customer data pipeline. Connect your whole customer data stack. Warehouse-first, open source Segment alternative.

- SignalWire: Build what’s next in communications with video, voice, and messaging APIs powered by elastic cloud infrastructure. Try it today at signalwire.com and use code SHIPIT for $25 in developer credit.

- Fastly: Our bandwidth partner. Fastly powers fast, secure, and scalable digital experiences. Move beyond your content delivery network to their powerful edge cloud platform. Learn more at fastly.com

Featuring:

- Yonatan Geifman

- Chris Benson

- Daniel Whitenack

Show Notes:

- Deci

- An Introduction to the Inference Stack and Inference Acceleration Techniques

- Deci and Intel Collaborate to Optimize Deep Learning Inference on Intel’s CPUs

- DeciNets: A New Efficient Frontier for Computer Vision Models

- White paper

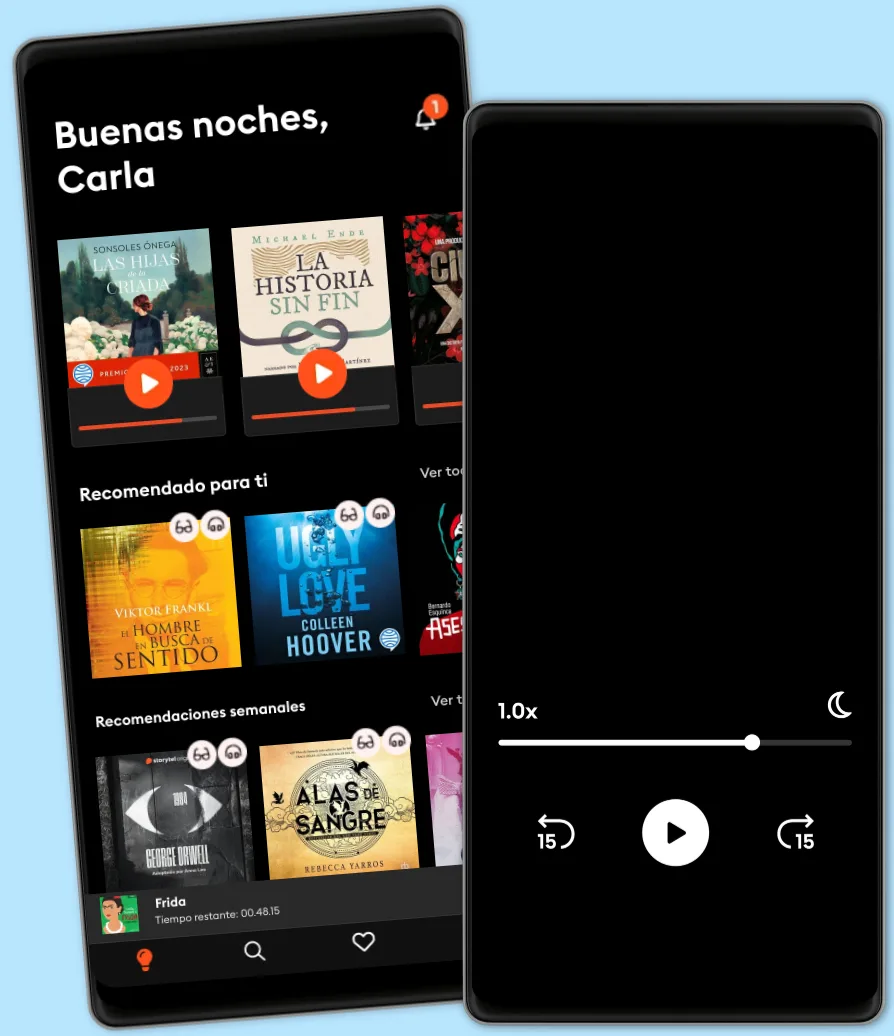

Escucha y lee

Descubre un mundo infinito de historias

- Lee y escucha todo lo que quieras

- Más de 1 millón de títulos

- Títulos exclusivos + Storytel Originals

- Precio regular: CLP 7,990 al mes

- Cancela cuando quieras

Otros podcasts que te pueden gustar...

- Un Libro Una HoraSER Podcast

- Hoy por HoySER Podcast

- Still Online - La nostra eredità digitaleBeatrice Petrella

- The DailyThe New York Times

- This American LifeThis American Life

- The Witch Trials of J.K. RowlingThe Free Press

- The Book ReviewThe New York Times

- M’usa, con l’apostrofo. Le donne di PicassoLetizia Bravi

- Diario Negreira

- A las bravasSER Podcast

- Un Libro Una HoraSER Podcast

- Hoy por HoySER Podcast

- Still Online - La nostra eredità digitaleBeatrice Petrella

- The DailyThe New York Times

- This American LifeThis American Life

- The Witch Trials of J.K. RowlingThe Free Press

- The Book ReviewThe New York Times

- M’usa, con l’apostrofo. Le donne di PicassoLetizia Bravi

- Diario Negreira

- A las bravasSER Podcast

Español

Chile