#1011 - Eliezer Yudkowsky - Why Superhuman AI Would Kill Us All

- Episode: 1009 of 1017

- Published: 25 Oct 2025

- Length: 1h 37min

- Language: English

- Category: Personal Development

Eliezer Yudkowsky is an AI researcher, decision theorist, and founder of the Machine Intelligence Research Institute.

Is AI our greatest hope or our final mistake? For all its promise to revolutionize human life, there’s a growing fear that artificial intelligence could end it altogether. How grounded are these fears, how close are we to losing control, and is there still time to change course before it’s too late?

Expect to learn the problem with building superhuman AI, why AI would have goals we haven’t programmed into it, whether there is such a thing as AI benevolence, what the actual goals of super-intelligent AI are and how far away it is, whether LLMs are actually dangerous and could become a super AI, how good we are at predicting the future of AI, whether extinction is possible with the development of AI, and much more…

Sponsors:

See discounts for all the products I use and recommend: https://chriswillx.com/deals

Get 15% off your first order of Intake’s magnetic nasal strips at https://intakebreathing.com/modernwisdom

Get 10% discount on all Gymshark’s products at https://gym.sh/modernwisdom (use code MODERNWISDOM10)

Get 4 extra months of Surfshark VPN at https://surfshark.com/modernwisdom

Timestamps:

(0:00) Superhuman AI Could Kill Us All

(10:25) How AI is Quietly Destroying Marriages

(15:22) AI is an Enemy, Not an Ally

(26:11) The Terrifying Truth About AI Alignment

(31:52) What Does Superintelligence Advancement Look Like?

(45:04) Are LLMs the Architect for Superhuman AI?

(52:18) How Close are We to the Point of No Return?

(01:01:07) Experts Need to be More Concerned

(01:15:01) How Can We Stop Superintelligence Killing Us?

(01:23:53) The Bleak Future of Superhuman AI

(01:31:55) Could Eliezer Be Wrong?

Extra Stuff:

Get my free reading list of 100 books to read before you die: https://chriswillx.com/books

Try my productivity energy drink Neutonic: https://neutonic.com/modernwisdom

Episodes You Might Enjoy:

#577 - David Goggins - This Is How To Master Your Life: https://tinyurl.com/43hv6y59

#712 - Dr Jordan Peterson - How To Destroy Your Negative Beliefs: https://tinyurl.com/2rtz7avf

#700 - Dr Andrew Huberman - The Secret Tools To Hack Your Brain: https://tinyurl.com/3ccn5vkp

Get In Touch:

Instagram: https://www.instagram.com/chriswillx

Twitter: https://www.twitter.com/chriswillx

YouTube: https://www.youtube.com/modernwisdompodcast

Email: https://chriswillx.com/contact

- Learn more about your ad choices. Visit megaphone.fm/adchoices

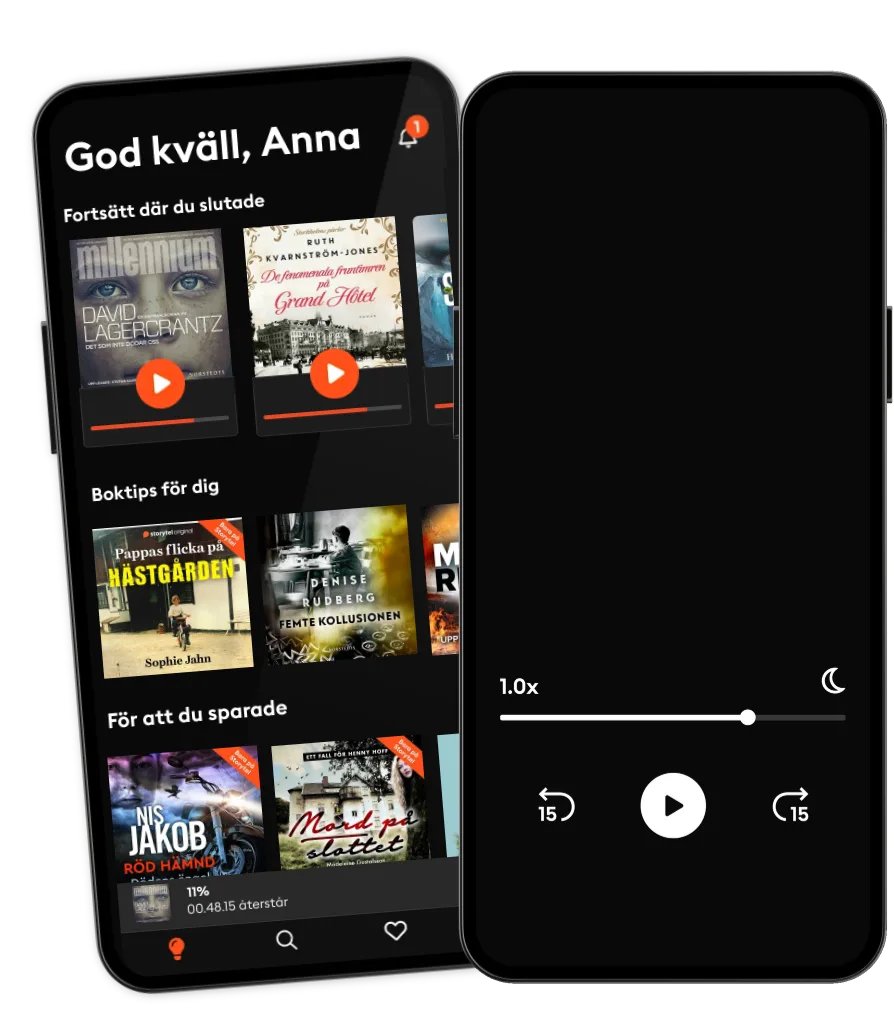

Lyssna när som helst, var som helst

Kliv in i en oändlig värld av stories

- 1 miljon stories

- Hundratals nya stories varje vecka

- Få tillgång till exklusivt innehåll

- Avsluta när du vill

Andra podcasts som du kanske gillar...

- Min historieALT for damerne

- Sotto pressione - Come uscire dalla trappola del burnoutAlessio Carciofi

- IgnifugheFederica Fabrizio

- Rise With ZubinRise With Zubin

- Quint Fit EpisodesQuint Fit

- 'I AM THAT' by Ekta BathijaEkta Bathija

- Eat Smart With AvantiiAvantii Deshpande

- SEXPANELETEmma Libner

- VoksenkærlighedAmanda Lagoni

- PengekassenTine Gudrun Petersen

- Min historieALT for damerne

- Sotto pressione - Come uscire dalla trappola del burnoutAlessio Carciofi

- IgnifugheFederica Fabrizio

- Rise With ZubinRise With Zubin

- Quint Fit EpisodesQuint Fit

- 'I AM THAT' by Ekta BathijaEkta Bathija

- Eat Smart With AvantiiAvantii Deshpande

- SEXPANELETEmma Libner

- VoksenkærlighedAmanda Lagoni

- PengekassenTine Gudrun Petersen

Svenska

Sverige