Jules Anh Tuan Nguyen Explains How AI Lets Amputee Control Prosthetic Hand, Video Games - Ep. 149

- Episode: 148 of 258

- Published: Aug 11, 2021

- Duration: 36min

- Language: English

- Category: Non-fiction

Path-breaking work that translates an amputee’s thoughts into finger motions, and even commands in video games, holds open the possibility of humans controlling just about anything digital with their minds.

Using GPUs, a group of researchers trained an AI neural decoder able to run on a compact, power-efficient NVIDIA Jetson Nano system on module (SOM) to translate 46-year-old Shawn Findley’s thoughts into individual finger motions.

And if that breakthrough weren’t enough, the team then plugged Findley into a PC running Far Cry 5 and Raiden IV, where he had his game avatar move, jump — even fly a virtual helicopter — using his mind.

The demonstration not only promises to give amputees more natural and responsive control over their prosthetics; it could one day give users almost superhuman capabilities.

The effort is detailed in a draft paper, or pre-print, titled “A Portable, Self-Contained Neuroprosthetic Hand with Deep Learning-Based Finger Control.” It describes an extraordinary cross-disciplinary collaboration behind a system that, in effect, allows humans to control just about anything digital with their thoughts.

Jules Anh Tuan Nguyen, the paper’s lead author and now a postdoctoral researcher at the University of Minnesota, spoke with NVIDIA AI Podcast host Noah Kravitz about his efforts to allow amputees to control their prosthetic limb — right down to the finger motions — with their minds.

blogs.nvidia.com/blog/2021/08/10/lending-a-helping-hand-jules-anh-tuan-nguyen-on-building-a-neuroprosthetic

Listen and read

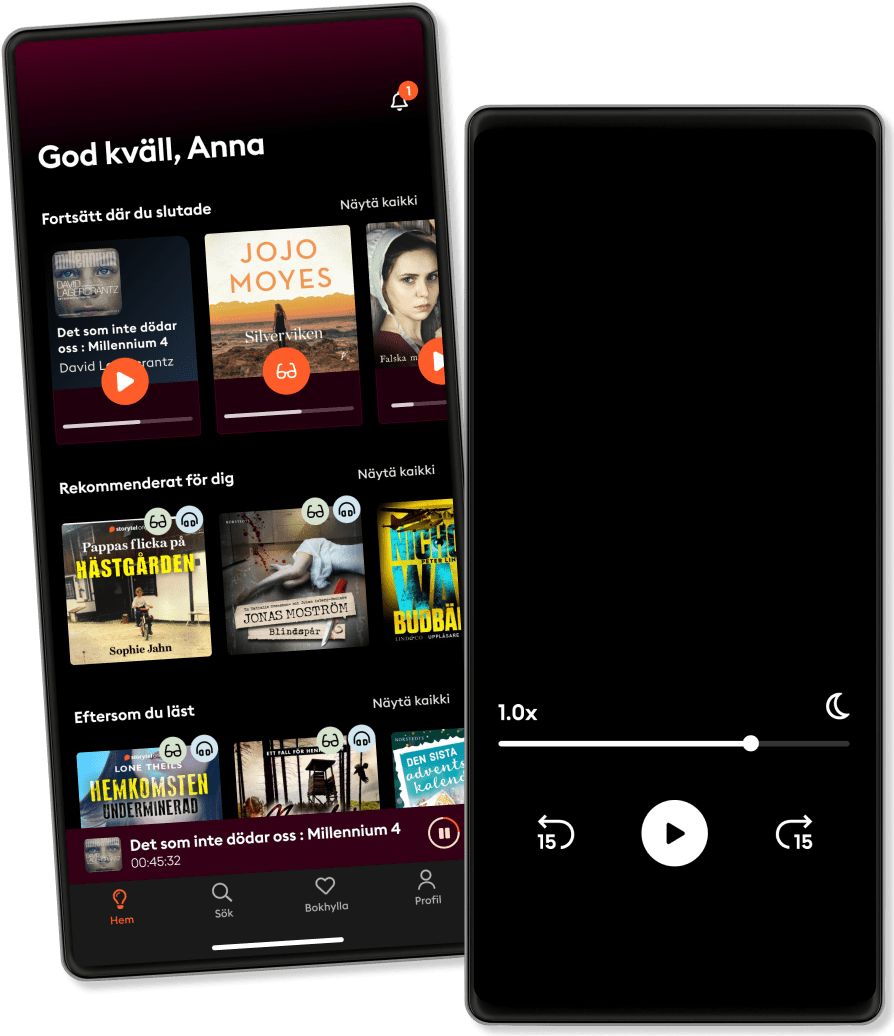

Step into an infinite world of stories

- Read and listen as much as you want

- Over 1 million titles

- Exclusive titles + Storytel Originals

- 14 days free trial, then €9.99/month

- Easy to cancel anytime

