Enhancing Deep Learning Performance Using Displaced Rectifier Linear Unit

- Language learning

- English

- Format

- Collection

Non-fiction

Deep learning has recently had a significant impact on computer vision, speech recognition, and natural language understanding. Despite these remarkable advances, deep learning's recent performance gains have been modest and usually rely on increasing the depth of the models, which often requires more computational resources such as processing time and memory. To tackle this problem, we turned our attention to the interaction between activation functions and batch normalization, which is now virtually mandatory. In this work, we propose the Displaced Rectifier Linear Unit (DReLU) activation function, conjecturing that extending the identity function of ReLU into the third quadrant enhances compatibility with batch normalization. Moreover, we used statistical tests to compare the impact of distinct activation functions (ReLU, LReLU, PReLU, ELU, and DReLU) on the learning speed and test accuracy of state-of-the-art VGG and Residual Network models. These convolutional neural networks were trained on CIFAR-10 and CIFAR-100, the most commonly used deep learning computer vision datasets. The results showed that DReLU sped up learning in all models and datasets. In addition, statistically significant performance assessments (p<0.05) showed that DReLU improved on the test accuracy obtained by ReLU in all scenarios. Furthermore, DReLU showed better test accuracy than any other tested activation function in all experiments, with one exception.
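
The description above says only that DReLU extends the identity part of ReLU into the third quadrant; it does not give a formula. As a minimal sketch, assuming the function can be written as max(x, -delta) with a small positive displacement `delta` (the name `delta` and the default value 0.05 are illustrative assumptions, not taken from this description), the difference from ReLU might look like this:

```python
def relu(x: float) -> float:
    """Standard ReLU: identity for x >= 0, zero otherwise."""
    return max(x, 0.0)


def drelu(x: float, delta: float = 0.05) -> float:
    """Assumed DReLU form: identity for x >= -delta, constant -delta below.

    `delta` is a hypothetical hyperparameter chosen for illustration only.
    """
    return max(x, -delta)


# Compare both functions on a few sample inputs.
inputs = [-1.0, -0.03, 0.0, 0.5]
relu_out = [relu(x) for x in inputs]
drelu_out = [drelu(x) for x in inputs]
```

Under this assumption, small negative inputs pass through DReLU unchanged while ReLU zeroes them, so the outputs can take slightly negative values.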

© 2022 Editora Dialética (Ebook): 9786525230757

Release date

Ebook: March 25, 2022

다른 사람들도 즐겼습니다 ...

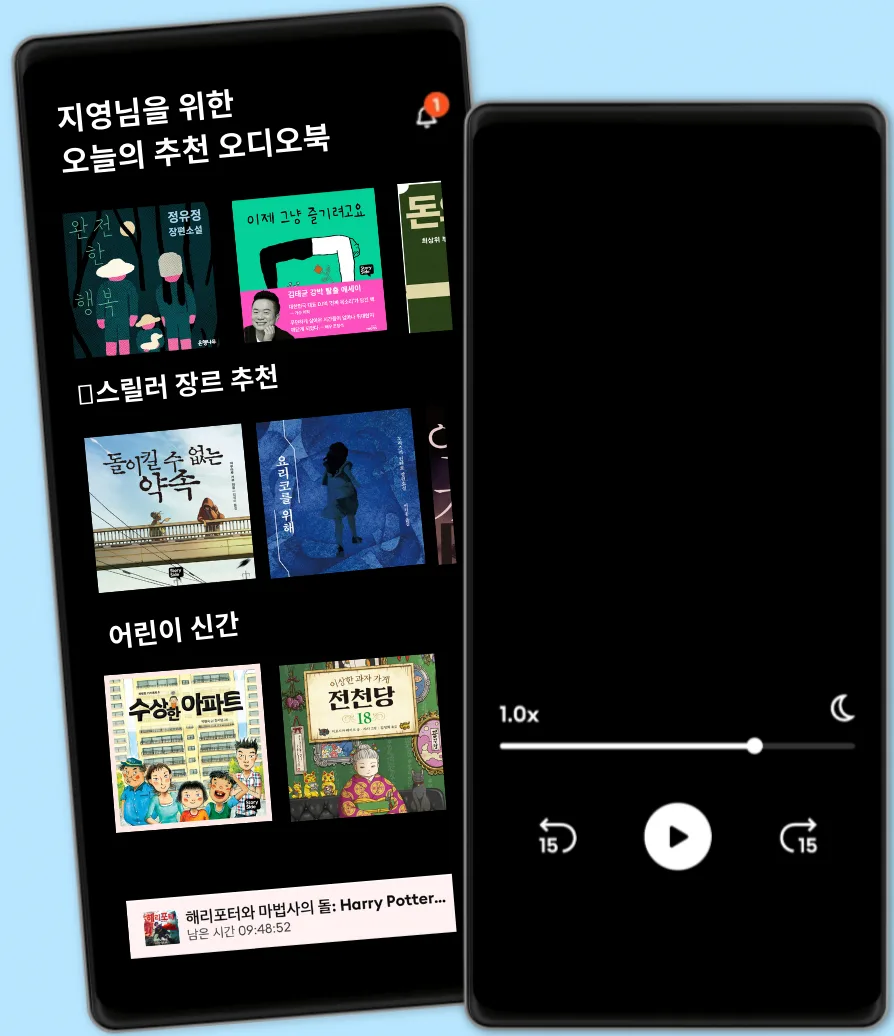

언제 어디서나 스토리텔

국내 유일 해리포터 시리즈 오디오북

5만권이상의 영어/한국어 오디오북

키즈 모드(어린이 안전 환경)

월정액 무제한 청취

언제든 취소 및 해지 가능

오프라인 액세스를 위한 도서 다운로드

스토리텔 언리미티드

5만권 이상의 영어, 한국어 오디오북을 무제한 들어보세요

13800 원 /월

사용자 1인

무제한 청취

언제든 해지하실 수 있어요

패밀리

친구 또는 가족과 함께 오디오북을 즐기고 싶은 분들을 위해

매달 21500 원 원 부터

2-3 계정

무제한 청취

언제든 해지하실 수 있어요

21500 원 /월