- 0 Reviews

- 0

- Episode

- 1087 of 1088

- Length

- 53 min.

- Language

- English

- Type

- Category

- Business books

You hear a lot about AI safety, and the idea that sufficiently advanced AI could pose some kind of threat to humans. So people are always talking about and researching "alignment" to ensure that new AI models comport with human needs and values. But what about humans' collective treatment of AI? A small but growing number of researchers talk about AI models potentially being sentient. Perhaps they are "moral patients." Perhaps they feel some kind of equivalent of pleasure and pain, all of which, if so, raises questions about how we use AI. They argue that one day we'll be talking about AI welfare the way we talk about animal rights, or humane versions of animal husbandry. On this episode we speak with Larissa Schiavo of Eleos AI. Eleos is an organization that says it's "preparing for AI sentience and welfare." In this conversation we discuss the work being done in the field, why some people think it's an important area for research, whether it's in tension with AI safety, and how our use and development of AI might change in a world where models' welfare were seen as an important consideration.

Only Bloomberg.com subscribers can get the Odd Lots newsletter in their inbox (now delivered every weekday), plus unlimited access to the site and app. Subscribe at bloomberg.com/subscriptions/oddlots

See omnystudio.com/listener for privacy information.

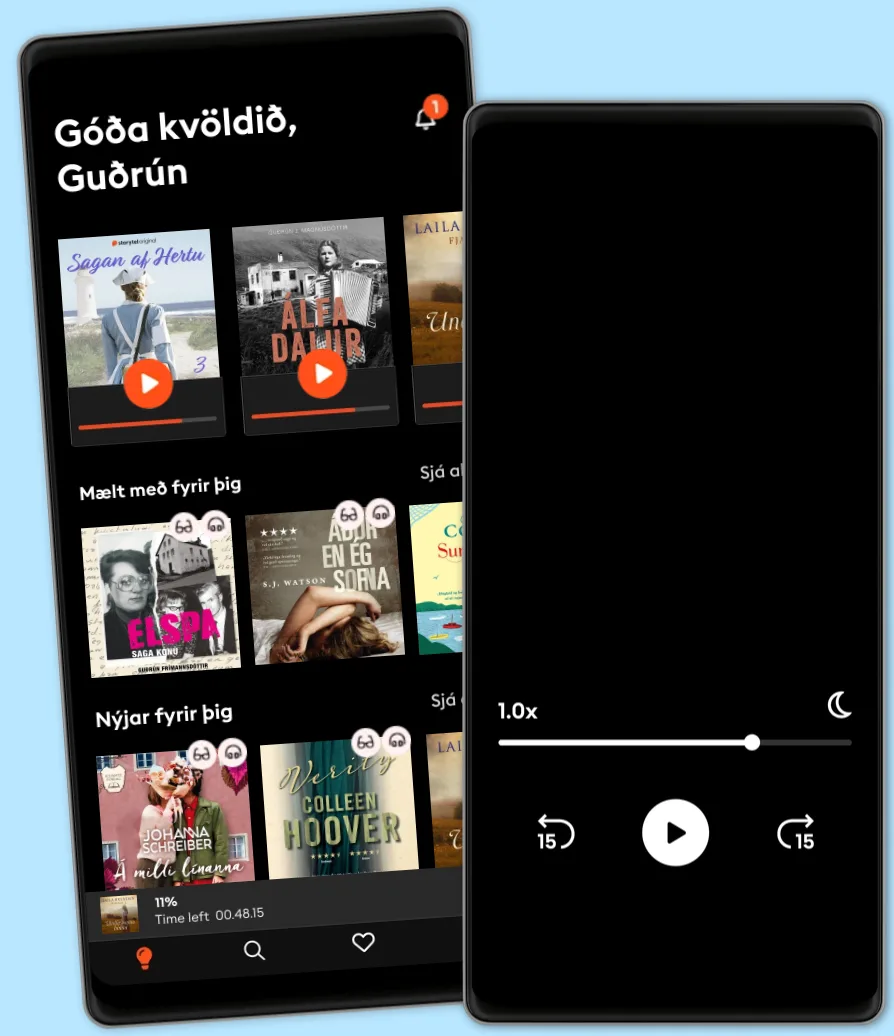

Hlustaðu og lestu

Stígðu inn í heim af óteljandi sögum

- Lestu og hlustaðu eins mikið og þú vilt

- Þúsundir titla

- Getur sagt upp hvenær sem er

- Engin skuldbinding

Other podcasts you might like

- HW News Editorial with Sujit Nair (HW News Network)

- Boost Your Career Podcast (Audio Pitara by Channel176 Productions)

- Tools of Titans: The Tactics, Routines, and Habits of World-Class Performers (Tim Ferriss)

- The Creative Penn Podcast For Writers (Joanna Penn)

- The AI in Business Podcast (Daniel Faggella)

- BrainStuff (iHeartPodcasts)

- Money Clinic with Claer Barrett (Financial Times)

- The Diary Of A CEO with Steven Bartlett (DOAC)

- The School of Greatness (Lewis Howes)

- The Mindset Mentor (Rob Dial)

- HW News Editorial with Sujit NairHW News Network

- Boost Your Career PodcastAudio Pitara by Channel176 Productions

- Tools of Titans: The Tactics, Routines, and Habits of World-Class PerformersTim Ferriss

- The Creative Penn Podcast For WritersJoanna Penn

- The AI in Business PodcastDaniel Faggella

- BrainStuffiHeartPodcasts

- Money Clinic with Claer BarrettFinancial Times

- The Diary Of A CEO with Steven BartlettDOAC

- The School of GreatnessLewis Howes

- The Mindset MentorRob Dial

Íslenska

Ísland