
Understanding AGI Alignment Challenges and Solutions - with Eliezer Yudkowsky of the Machine Intelligence Research Institute

- Episode: 901 of 1070

- Published: 25 Jan. 2025

- Length: 43 min.

- Language: English

- Category: Business books

Today's episode is a special addition to our AI Futures series: a sneak peek at an upcoming episode of our Trajectory podcast with guest Eliezer Yudkowsky, AI researcher, founder, and research fellow at the Machine Intelligence Research Institute. Eliezer joins Emerj CEO and Head of Research Daniel Faggella to discuss the governance challenges posed by increasingly powerful AI systems, and what it might take to ensure a safe and beneficial trajectory for humanity. If you've enjoyed or benefited from the insights in this episode, consider leaving us a five-star review on Apple Podcasts, and let us know what you learned, found helpful, or liked most about the show!

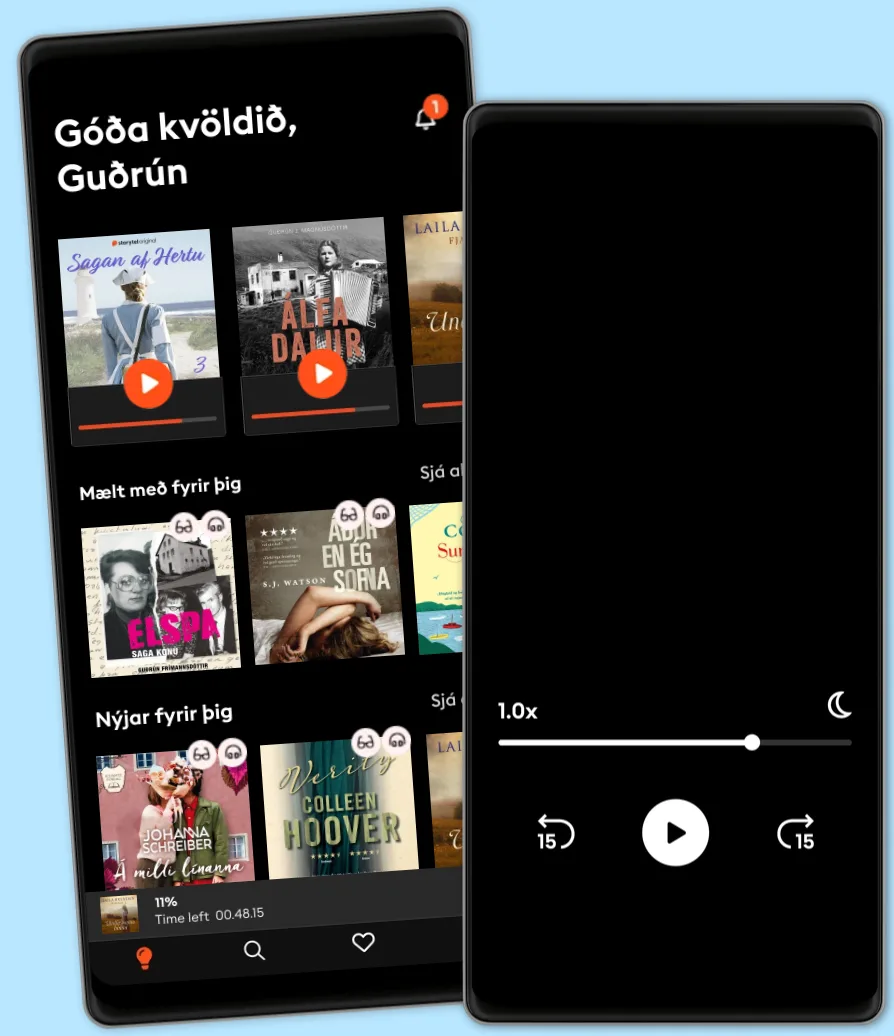


Other podcasts you might like

- Boost Your Career Podcast (Audio Pitara by Channel176 Productions)

- Tools of Titans: The Tactics, Routines, and Habits of World-Class Performers (Tim Ferriss)

- The Creative Penn Podcast For Writers (Joanna Penn)

- Fixable (TED)

- PBD Podcast (PBD Podcast)

- BrainStuff (iHeartPodcasts)

- The Breakdown (Blockworks)

- Reuters World News (Reuters)

- Money Clinic with Claer Barrett (Financial Times)

- HW News Editorial with Sujit Nair (HW News Network)

