Anthropic Head of Pretraining on Scaling Laws, Compute, and the Future of AI

- Episode: 291 of 304

- Published: 1 Oct 2025

- Length: 1 hr 4 min

- Language: English

- Category: Non-fiction

Ever wonder what it actually takes to train a frontier AI model? YC General Partner Ankit Gupta sits down with Nick Joseph, Anthropic's Head of Pre-training, to explore the engineering challenges behind training Claude, from managing thousands of GPUs and debugging cursed bugs to balancing compute between pre-training and RL. We cover scaling laws, data strategies, team composition, and why the hardest problems in AI are often infrastructure problems, not ML problems.

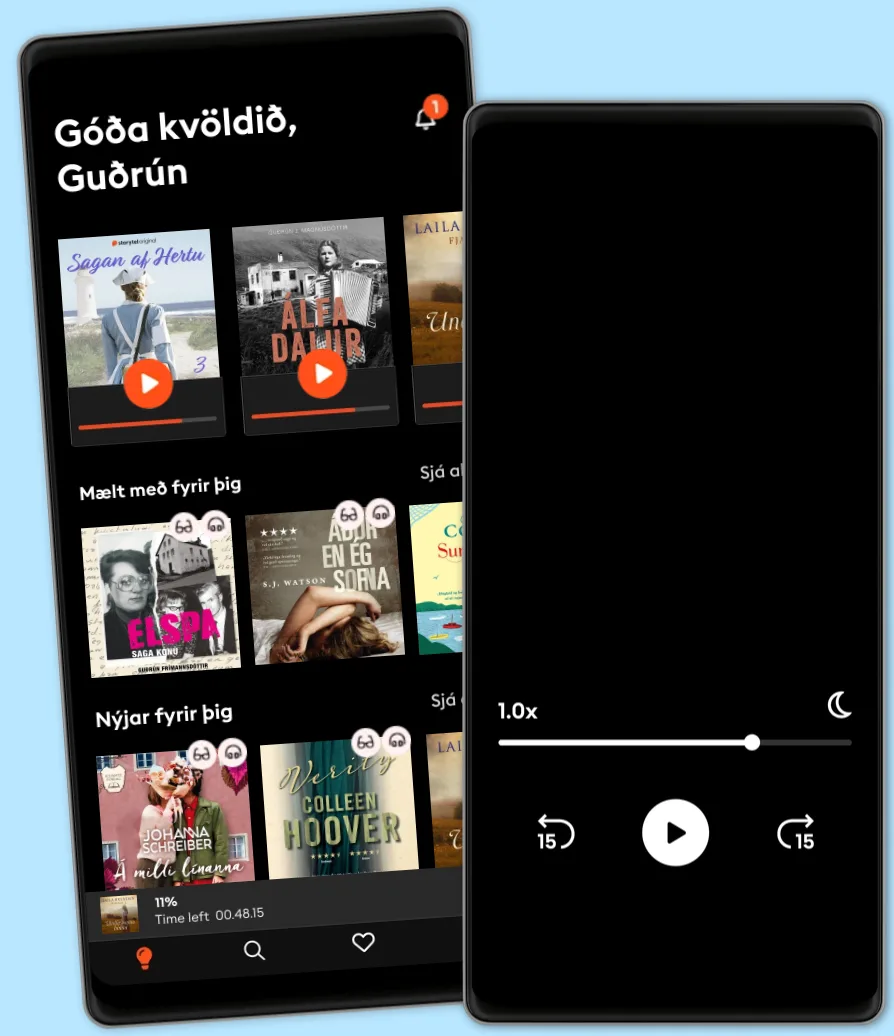
