Human Compatible AI and AGI Risks - with Stuart Russell of the University of California

- Episode: 1022 of 1058

- Published: 27 Sep 2025

- Length: 1 h 3 min

- Language: English

- Category: Economics & Business

In this special weekend edition of the 'AI in Business' podcast's AI Futures series, Emerj CEO and Head of Research Daniel Faggella speaks with Stuart Russell, Distinguished Professor of Computational Precision Health and Computer Science at the University of California and President of the International Association for Safe & Ethical AI. Widely considered one of the earliest voices warning about the uncontrollability of advanced AI systems, Russell discusses the urgent challenges posed by AGI development, the incentives driving companies into a dangerous race dynamic, and the forms of international governance that may be necessary to prevent catastrophic risks. The conversation ranges from technical safety approaches to potential international treaty models, the role of culture and media in shaping public awareness, and the possible benefits of getting AI governance right.

This episode was originally published on Daniel's 'The Trajectory' podcast, which focuses exclusively on long-term AI futures.

Want to share your AI adoption story with executive peers? Visit emerj.com/expert2 for more information and to be considered as a future guest on the 'AI in Business' podcast. If you've enjoyed or benefited from the insights in this episode, consider leaving a five-star review on Apple Podcasts and telling us what you learned, found helpful, or liked most about the show.

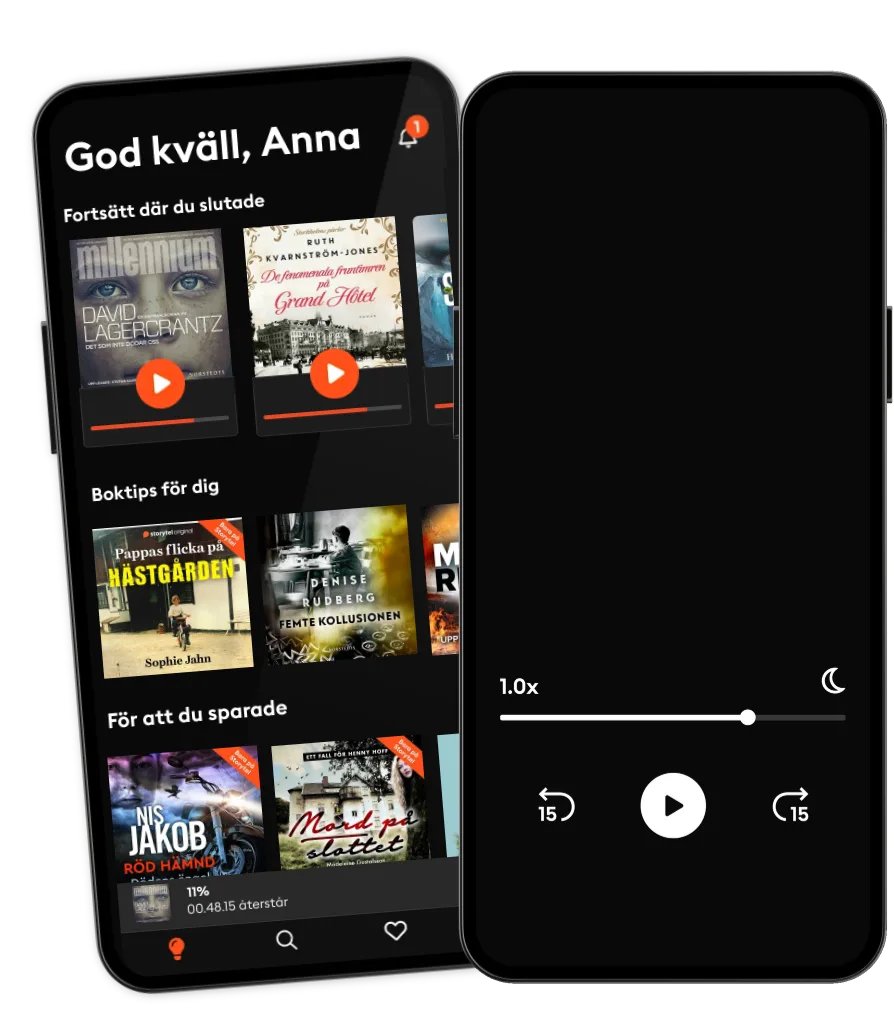



