Model inspection and interpretation at Seldon

- Episode: 48 of 343

- Published: 17 June 2019

- Length: 43 min

- Language: English

- Category: Non-fiction

Interpreting complicated models is a hot topic. How can we trust and manage AI models that we can’t explain? In this episode, Janis Klaise, a data scientist with Seldon, joins us to talk about model interpretation and Seldon’s new open source project called Alibi. Janis also gives some of his thoughts on production ML/AI and how Seldon addresses related problems.

Sponsors:

- DigitalOcean: Check out DigitalOcean’s dedicated vCPU Droplets with dedicated vCPU threads. Get started for free with a $50 credit. Learn more at do.co/changelog.

- DataEngPodcast: A podcast about data engineering and modern data infrastructure.

- Fastly: Our bandwidth partner. Fastly powers fast, secure, and scalable digital experiences. Move beyond your content delivery network to their powerful edge cloud platform. Learn more at fastly.com.

Featuring:

- Janis Klaise (GitHub, LinkedIn, X)

- Chris Benson (Website, GitHub, LinkedIn, X)

- Daniel Whitenack (Website, GitHub, X)

Show Notes:

- Seldon

- Seldon Core

- Alibi

Books

- “The Foundation Series” by Isaac Asimov

- “Interpretable Machine Learning” by Christoph Molnar

Something missing or broken? PRs welcome!

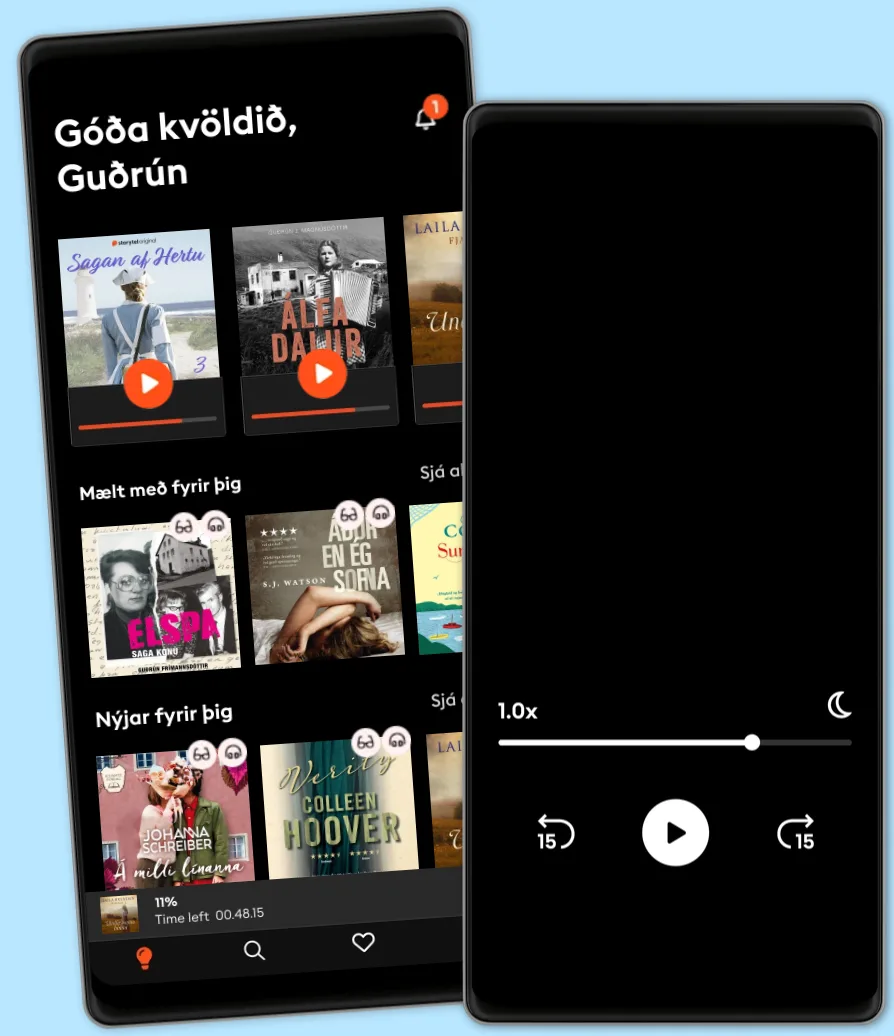

Hlustaðu og lestu

Stígðu inn í heim af óteljandi sögum

- Lestu og hlustaðu eins mikið og þú vilt

- Þúsundir titla

- Getur sagt upp hvenær sem er

- Engin skuldbinding

Other podcasts you might like ...

- Stærke portrætterALT for damerne

- ALT for damerne podcastALT for damerne

- Anupama Chopra ReviewsFilm Companion

- Interviews with Anupama ChopraFilm Companion

- FC PopCornFilm Companion

- Spill the Tea with SnehaFilm Companion

- BodenfalletGabriella Lahti

- Dirty JaneJohn Mork

- DiskoteksbrandenAntonio de la Cruz

- EgyptenaffärenJens Nielsen

- Stærke portrætterALT for damerne

- ALT for damerne podcastALT for damerne

- Anupama Chopra ReviewsFilm Companion

- Interviews with Anupama ChopraFilm Companion

- FC PopCornFilm Companion

- Spill the Tea with SnehaFilm Companion

- BodenfalletGabriella Lahti

- Dirty JaneJohn Mork

- DiskoteksbrandenAntonio de la Cruz

- EgyptenaffärenJens Nielsen

Íslenska

Ísland