- Episode: 14 of 58
- Length: 42 min
- Language: English
- Category: Personal Development

Try a walking desk to stay healthy while you study or work!

Full notes at ocdevel.com/mlg/15

Concepts

- Performance evaluation metrics: tools for assessing how well a machine learning model performs tasks such as spam classification or housing price prediction. Common metrics include accuracy, precision, recall, F1/F2 scores, and confusion matrices. F1 and F2 combine precision and recall into a single score, with F2 weighting recall more heavily.
- Accuracy: the simplest measure of performance; the fraction of predictions that were correct out of all predictions made.
- Precision and recall:
  - Precision: the ratio of true positive predictions to all positive predictions made by the model (how often the model's positive predictions were correct).
  - Recall: the ratio of true positive predictions to all actual positive examples (how many of the actual positives the model caught).

Performance Improvement Techniques

- Regularization: a technique that reduces overfitting by adding a penalty on large coefficients in linear models. It helps strike a balance between bias (underfitting) and variance (overfitting).
- Hyperparameters and cross-validation: tuning hyperparameters is crucial for optimal performance. Splitting the data into training, validation, and test sets lets you fit the model's parameters on the training set, tune its hyperparameters against the validation set, and keep the test set for a final, unbiased estimate. Cross-validation improves generalization by checking that performance is consistent across different subsets of the data.

The Bias-Variance Tradeoff

- High variance (overfitting): the model fits the noise in the training data instead of the underlying pattern. It is highly flexible but generalizes poorly.
- High bias (underfitting): the model is too simple to capture the underlying pattern.
- Regularization helps balance bias and variance, improving how well the model generalizes.

Practical Steps

- Data preprocessing: ensure the data is complete and consistent by normalizing features and handling missing values.
- Model selection: use performance evaluation metrics to compare models and select the one that best fits the problem.
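The metric definitions above can be sketched in plain Python. This is a minimal illustration, not code from the episode: the helper names (`ConfusionMatrix`, `confusion_matrix`, `precision`, `recall`, `f_beta`) are hypothetical, labels are assumed to be binary with `1` as the positive class (e.g. "spam"), and a ratio with a zero denominator is treated as `0.0` by convention.

```python
from dataclasses import dataclass


@dataclass
class ConfusionMatrix:
    """Counts for a binary classifier (hypothetical helper type)."""
    tp: int  # predicted positive, actually positive
    fp: int  # predicted positive, actually negative
    fn: int  # predicted negative, actually positive
    tn: int  # predicted negative, actually negative


def confusion_matrix(y_true, y_pred, positive=1):
    """Tally the four outcomes by comparing each prediction to its label."""
    tp = fp = fn = tn = 0
    for truth, pred in zip(y_true, y_pred):
        if pred == positive:
            if truth == positive:
                tp += 1
            else:
                fp += 1
        elif truth == positive:
            fn += 1
        else:
            tn += 1
    return ConfusionMatrix(tp, fp, fn, tn)


def _safe_div(num, den):
    # Convention assumed here: a ratio with an empty denominator is 0.0.
    return num / den if den else 0.0


def accuracy(cm):
    # Correct predictions out of all predictions.
    return _safe_div(cm.tp + cm.tn, cm.tp + cm.fp + cm.fn + cm.tn)


def precision(cm):
    # Of everything predicted positive, how much really was positive?
    return _safe_div(cm.tp, cm.tp + cm.fp)


def recall(cm):
    # Of everything actually positive, how much did the model catch?
    return _safe_div(cm.tp, cm.tp + cm.fn)


def f_beta(cm, beta=1.0):
    # Weighted harmonic mean of precision and recall:
    # beta=1 gives F1; beta=2 (F2) weights recall more heavily.
    p, r = precision(cm), recall(cm)
    b2 = beta * beta
    return _safe_div((1 + b2) * p * r, b2 * p + r)


# Toy spam example: 1 = spam, 0 = not spam (made-up labels).
y_true = [1, 1, 1, 0, 0, 0, 0, 0]
y_pred = [1, 0, 0, 1, 0, 0, 0, 0]
cm = confusion_matrix(y_true, y_pred)
print(cm)  # ConfusionMatrix(tp=1, fp=1, fn=2, tn=4)
print(f"accuracy={accuracy(cm):.3f} precision={precision(cm):.3f} "
      f"recall={recall(cm):.3f} F1={f_beta(cm, 1):.3f} F2={f_beta(cm, 2):.3f}")
# accuracy=0.625 precision=0.500 recall=0.333 F1=0.400 F2=0.357
```

In this toy run, accuracy (0.625) looks respectable even though the model caught only one of the three spam messages. Recall exposes that weakness, and F2 penalizes it more than F1 does.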
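Regularization and cross-validation fit together: the penalty strength is itself a hyperparameter, and cross-validation is one way to choose it. The sketch below uses one-feature ridge regression (an L2 penalty on the slope, with the intercept left unpenalized) as a stand-in for "linear models" in general. The function names, the contiguous unshuffled folds, and the mean-squared-error criterion are illustrative assumptions, not the episode's method.

```python
def ridge_fit(xs, ys, lam):
    """Fit y ~ w*x + b minimizing sum((y - w*x - b)^2) + lam * w^2.

    Centering x and y keeps the intercept b unpenalized; the slope then has
    the closed form w = Sxy / (Sxx + lam), so a larger lam shrinks w toward
    zero (more bias, less variance).
    """
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    sxx = sum((x - x_mean) ** 2 for x in xs)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    if sxx + lam == 0:
        w = 0.0  # degenerate case: all x identical and no penalty
    else:
        w = sxy / (sxx + lam)
    b = y_mean - w * x_mean
    return w, b


def mse(xs, ys, w, b):
    # Mean squared error of the line w*x + b on the given points.
    return sum((y - (w * x + b)) ** 2 for x, y in zip(xs, ys)) / len(xs)


def k_fold_mse(xs, ys, lam, k=5):
    """Average validation MSE over k contiguous folds.

    Assumes xs and ys are lists with len(xs) >= k. No shuffling is done here;
    the last fold absorbs any remainder.
    """
    n = len(xs)
    fold_size = n // k
    errors = []
    for i in range(k):
        start = i * fold_size
        stop = n if i == k - 1 else start + fold_size
        # Hold out [start, stop) for validation and train on the rest.
        w, b = ridge_fit(xs[:start] + xs[stop:], ys[:start] + ys[stop:], lam)
        errors.append(mse(xs[start:stop], ys[start:stop], w, b))
    return sum(errors) / k


def select_lambda(xs, ys, candidates, k=5):
    # Pick the penalty strength with the lowest cross-validated error.
    return min(candidates, key=lambda lam: k_fold_mse(xs, ys, lam, k))


xs = [1, 2, 3, 4, 5]
ys = [2, 4, 6, 8, 10]
print(ridge_fit(xs, ys, 0.0))   # (2.0, 0.0): no penalty, ordinary least squares
print(ridge_fit(xs, ys, 10.0))  # (1.0, 3.0): penalty halves the slope
```

Because this toy data is noise-free, cross-validation will favor little or no penalty; on noisy data a moderate penalty often wins. Real pipelines usually shuffle before splitting into folds and then refit on all training data with the chosen penalty; scikit-learn, for example, provides `Ridge`, `KFold`, and `cross_val_score` for this.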
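For the data-preprocessing step, here is a minimal sketch of mean imputation followed by z-score standardization. Z-score scaling is one common form of normalization; min-max scaling is another. The function names are hypothetical, missing values are assumed to be represented as `None`, and the statistics are learned from the training split only and then reused for validation and test data, so held-out data does not leak into training.

```python
from statistics import mean, pstdev
from typing import Optional, Sequence


def fit_preprocessor(train: Sequence[Optional[float]]) -> tuple[float, float, float]:
    """Learn the fill value, mean, and standard deviation from training data.

    Assumes at least one non-missing value in the training column.
    """
    present = [v for v in train if v is not None]
    fill = mean(present)  # value used to impute missing entries
    imputed = [fill if v is None else v for v in train]
    mu = mean(imputed)
    sigma = pstdev(imputed)  # population standard deviation
    return fill, mu, sigma


def transform(column: Sequence[Optional[float]], fill: float,
              mu: float, sigma: float) -> list[float]:
    """Impute missing entries, then rescale to zero mean and unit variance."""
    imputed = [fill if v is None else v for v in column]
    if sigma == 0:
        # Constant feature: nothing to scale, so map everything to 0.0.
        return [0.0 for _ in imputed]
    return [(v - mu) / sigma for v in imputed]


train = [2.0, None, 4.0, 6.0]
fill, mu, sigma = fit_preprocessor(train)
print(fill, mu)                            # 4.0 4.0
print(transform(train, fill, mu, sigma))   # training column, standardized
print(transform([None, 8.0], fill, mu, sigma))  # held-out data, same statistics
```

Reusing the training statistics means a held-out value is scaled exactly as it would have been had it appeared in training, which keeps validation and test scores honest.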

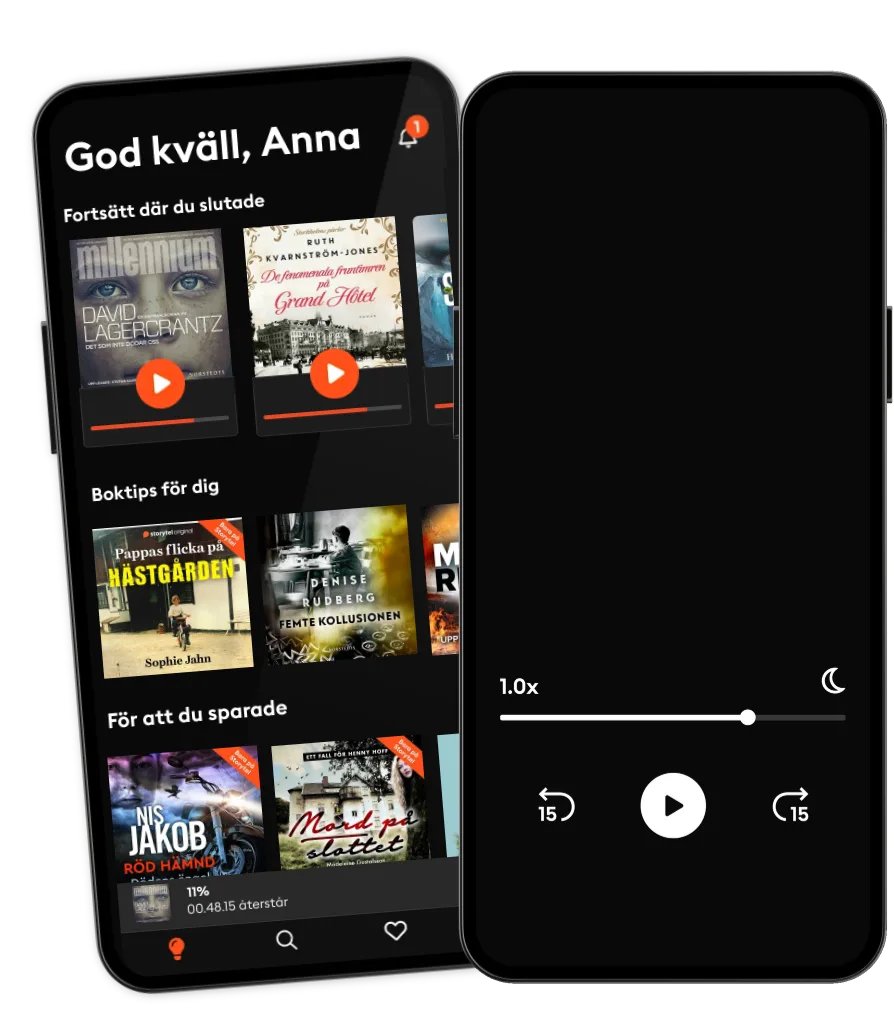
